import numpy as np

from matplotlib import pyplot as plt

def pad(X):

return np.append(X, np.ones((X.shape[0], 1)), 1)

def LR_data(n_train = 100, n_val = 100, p_features = 1, noise = .1, w = None):

if w is None:

w = np.random.rand(p_features + 1) + .2

X_train = np.random.rand(n_train, p_features)

y_train = pad(X_train)@w + noise*np.random.randn(n_train)

X_val = np.random.rand(n_val, p_features)

y_val = pad(X_val)@w + noise*np.random.randn(n_val)

return X_train, y_train, X_val, y_valSource code

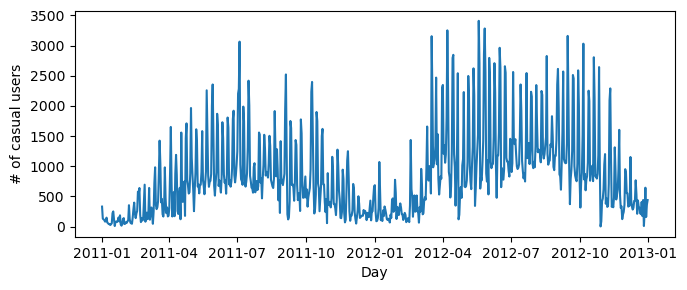

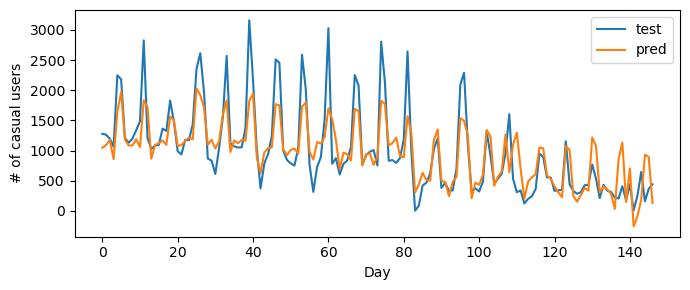

Implementing Linear Regression

In this blog post I implement linear regression in two ways: analytically, using an equation that solves for the optimal model weights, and using gradient descent, similar to the methods used in my prior logistic regression blog post. The implementation of linear regression is similar to logistic regression, but rather than calculating binary labels for a given data point, it calculates a specific value based on a data point’s features. This is what differentiates regression tasks (predicting a number) from classification tasks (predicting a label). To calculate optimal weights analytically, the following formula was used (taken from lecture notes): \[\mathbf{\hat{w}}=\mathbf{(X^TX)^{-1}X^Ty}.\] When implementing linear regression with gradient descent, the gradient was calculated with (from blog assignment description): \[\mathbf{\nabla L(w)}=\mathbf{X^T(Xw-y)},\] and used to perform weight updates as done when using gradient descent for logistic regression. Both methods produced the same weights and were able to achieve relatively high accuracies on training and validation data. Experiments were done to analyze overfitting as the number of features were increased, and LASSO regularization was employed to minimize overfitting with increasing features. Lastly, I use my linear regression model to predict trends in casual bikeshare usage in Washington DC, finding that my model is able to learn trends in bikeshare usage relatively well.

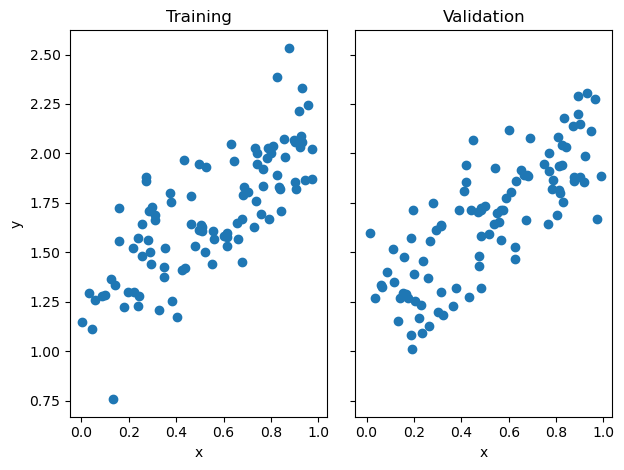

Testing Two Implementations

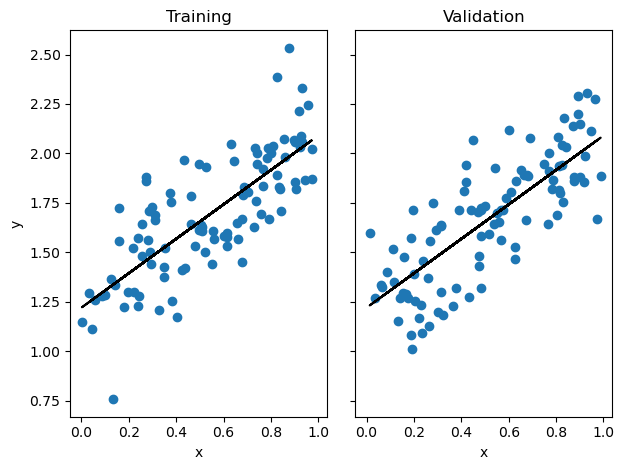

Before testing my two fitting techniques, I have to generate some data. I have generated both training and validation data, visualized below. We can see the two datasets exhibit similar trends, so my model trained on the training data, should generalize to the validation data relatively well.

n_train = 100

n_val = 100

p_features = 1

noise = 0.2

# create some data

X_train, y_train, X_val, y_val = LR_data(n_train, n_val, p_features, noise)

# plot it

fig, axarr = plt.subplots(1, 2, sharex = True, sharey = True)

axarr[0].scatter(X_train, y_train)

axarr[1].scatter(X_val, y_val)

labs = axarr[0].set(title = "Training", xlabel = "x", ylabel = "y")

labs = axarr[1].set(title = "Validation", xlabel = "x")

plt.tight_layout()

Next I import the model and test it, first fitting the data using the analytical technique desribed above. When we print the accuracies, we see a training score of 0.59 and a validation score of 0.62. The model actually performs better on the validation data! This is encouraging, as it means we are likely not overfitting.

from linear_regression import LinearRegression

LR = LinearRegression()

LR.fit(X_train, y_train) # I used the analytical formula as my default fit method

print(f"Training score = {LR.score(X_train, y_train).round(4)}")

print(f"Validation score = {LR.score(X_val, y_val).round(4)}")Training score = 0.5944

Validation score = 0.6242We can visualize the results of our model, and we see that it does a good job of drawing a line that reflects trends in the data.

# plot it

fig, axarr = plt.subplots(1, 2, sharex = True, sharey = True)

axarr[0].scatter(X_train, y_train)

axarr[0].plot(X_train, LR.predict(X_train), color = "black")

axarr[1].scatter(X_val, y_val)

axarr[1].plot(X_val, LR.predict(X_val), color = "black")

labs = axarr[0].set(title = "Training", xlabel = "x", ylabel = "y")

labs = axarr[1].set(title = "Validation", xlabel = "x")

plt.tight_layout()

When we print the weights of the model fit using analytical methods and compare it to the model weights when using gradient descent, we see that we get the same weights, as we would expect. If we trained the gradient descent model on more epochs, we would likely see even more agreement in the later decimal places.

LR.warray([1.09501024, 0.88755776])LR2 = LinearRegression()

LR2.fit(X_train, y_train, method = "gradient", alpha = 0.01, max_epochs = 1e2)

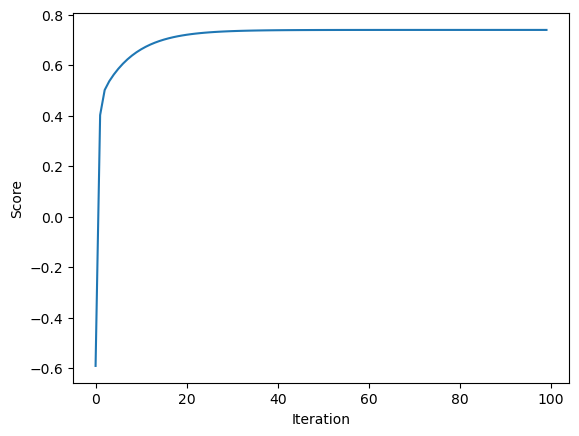

LR2.warray([1.09428466, 0.88794095])We can also plot the score history of the gradient descent model and we see an initial steep increase in the score, as expected, before plateauing around 0.7 after about 20 iterations.

plt.plot(LR2.score_history)

labels = plt.gca().set(xlabel = "Iteration", ylabel = "Score")

Experimenting with Number of Features

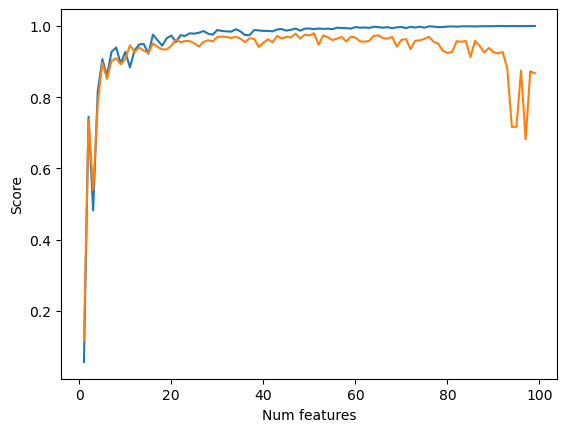

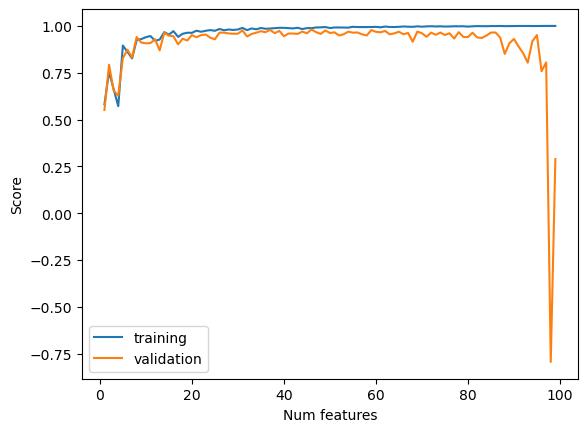

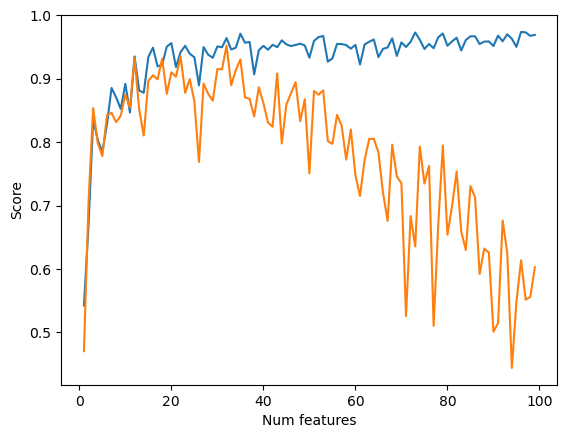

Next we increase the number of features and see how this impacts model performance on both training and validation data. We see that as we increase the number of features, the training score also increases before plateauing around 1.0, the highest possible value. The validation score initially increases as well, but then sharply declines to values below zero, indicating very poor performance, as the number of features approaches the number of data points. This is due to overfitting. As we increase the number of features to get closer to the number of data points, the model has a difficult time generalizing and extracting patterns because there is not enough data. This negatively impacts the validation score because rather than extracting general patterns, the model simply learns the exact behavior of the training dataset.

n_train = 100

n_val = 100

noise = 0.2

train_scores = []

val_scores = []

LR3 = LinearRegression()

for i in range(1,n_train):

p_features = i

X_train, y_train, X_val, y_val = LR_data(n_train, n_val, p_features, noise)

LR3.fit(X_train, y_train)

train_scores.append(LR3.score(X_train, y_train).round(4))

val_scores.append(LR3.score(X_val, y_val).round(4))

plt.plot(np.arange(1,n_train),train_scores, label = "training")

plt.plot(np.arange(1,n_train),val_scores, label = "validation")

labels = plt.gca().set(xlabel = "Num features", ylabel = "Score")

legend = plt.legend()

To combat this, we utilize LASSO regularization. LASSO regularization constrains the weight vector to be small, forcing many weights to be zero. This minimizes the effects of overfitting because it constrains the number of features that actually contribute to the model’s final predictions. When we employ LASSO regularization with an alpha of 0.001 we see a dip in later validation scores, but it is much smaller, dipping from about 1.0 to 0.8, rather than falling to almost -0.75.

from sklearn.linear_model import Lasso

n_train = 100

n_val = 100

noise = 0.2

train_scores = []

val_scores = []

L = Lasso(alpha = 0.001)

for i in range(1,n_train):

p_features = i

X_train, y_train, X_val, y_val = LR_data(n_train, n_val, p_features, noise)

L.fit(X_train, y_train)

train_scores.append(L.score(X_train, y_train).round(4))

val_scores.append(L.score(X_val, y_val).round(4))

plt.plot(np.arange(1,n_train),train_scores, label = "training")

plt.plot(np.arange(1,n_train),val_scores, label = "validation")

labels = plt.gca().set(xlabel = "Num features", ylabel = "Score")

legend = plt.legend()

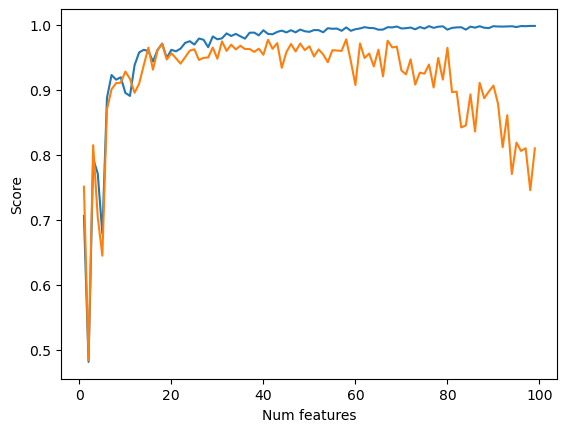

Experimenting with different alpha values, we see that when we increase alpha to 0.01 the score dips further. When we decrease alpha to 0.0001, we see similar behavior to our original alpha value of 0.001, with a slightly smaller dip.

from sklearn.linear_model import Lasso

n_train = 100

n_val = 100

noise = 0.2

train_scores = []

val_scores = []

L = Lasso(alpha = 0.01)

for i in range(1,n_train):

p_features = i

X_train, y_train, X_val, y_val = LR_data(n_train, n_val, p_features, noise)

L.fit(X_train, y_train)

train_scores.append(L.score(X_train, y_train).round(4))

val_scores.append(L.score(X_val, y_val).round(4))

plt.plot(np.arange(1,n_train),train_scores, label = "training")

plt.plot(np.arange(1,n_train),val_scores, label = "validation")

labels = plt.gca().set(xlabel = "Num features", ylabel = "Score")

legend = plt.legend()

from sklearn.linear_model import Lasso

n_train = 100

n_val = 100

noise = 0.2

train_scores = []

val_scores = []

L = Lasso(alpha = 0.0001)

for i in range(1,n_train):

p_features = i

X_train, y_train, X_val, y_val = LR_data(n_train, n_val, p_features, noise)

L.fit(X_train, y_train)

train_scores.append(L.score(X_train, y_train).round(4))

val_scores.append(L.score(X_val, y_val).round(4))

plt.plot(np.arange(1,n_train),train_scores, label = "training")

plt.plot(np.arange(1,n_train),val_scores, label = "validation")

labels = plt.gca().set(xlabel = "Num features", ylabel = "Score")

legend = plt.legend()/Users/elizawieman/opt/anaconda3/envs/ml-0451/lib/python3.9/site-packages/sklearn/linear_model/_coordinate_descent.py:631: ConvergenceWarning: Objective did not converge. You might want to increase the number of iterations, check the scale of the features or consider increasing regularisation. Duality gap: 1.029e-01, tolerance: 4.254e-02

model = cd_fast.enet_coordinate_descent(